44 confident learning estimating uncertainty in dataset labels

Data Noise and Label Noise in Machine Learning - Medium Uncertainty Estimation This is not really a defense itself, but uncertainty estimation yields valuable insights into the data samples. Estimates of aleatoric, epistemic, and label uncertainty can detect certain types of data and label noise [11, 12].

Are Label Errors Imperative? Is Confident Learning Useful? Confident learning (CL) is a class of learning where the focus is to learn well despite some noise in the dataset. This is achieved by accurately and directly characterizing the uncertainty of label noise in the data. The foundation CL depends on is that label noise is class-conditional, depending only on the latent true class, not the data.
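The class-conditional assumption in the snippet above can be written compactly. The notation is borrowed from the CL literature rather than from the snippet itself: \(\tilde{y}\) is the observed (possibly noisy) label, \(y^*\) is the latent true label, and \(x\) is the example.

\[
p(\tilde{y} = i \mid y^* = j, x) = p(\tilde{y} = i \mid y^* = j) \quad \text{for all classes } i, j
\]

In words: once the true class is known, the chance of a particular mislabeling does not depend on the individual example.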

Confident Learning: Estimating Uncertainty in Dataset Labels Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate...


Confident Learning: Estimating ... approaches to generalize confident learning (CL) for this purpose. Estimating the joint distribution is challenging as it requires disambiguation of epistemic uncertainty (model predicted probabilities) from aleatoric uncertainty (noisy labels) (Chowdhary and Dupuis, 2013), but useful because its marginals yield important statistics used in the literature,

On Learning Under Dataset Noise - Guanlin Li Confident Learning: Estimating Uncertainty in Dataset Labels, which was submitted to AISTATS 2020. This is a method paper for empirical improvement.

Find label issues with confident learning for NLP In this article I introduce you to a method to find potentially erroneously labeled examples in your training data. It's called Confident Learning. We will see later how it works, but let's look at the data set we're gonna use. `import pandas as pd` `import numpy as np` Load the dataset

Confident Learning: Estimating Uncertainty in Dataset Labels by C Northcutt · 2021 · Cited by 202. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in ...

Learning with Neighbor Consistency for Noisy Labels | DeepAI Recent advances in deep learning have relied on large, labelled datasets to train high-capacity models. However, collecting large datasets in a time- and cost-efficient manner often results in label noise. We present a method for learning from noisy labels that leverages similarities between training examples in feature space, encouraging the prediction of each example to be similar to its ...

Chipbrain Research | ChipBrain | Boston Confident Learning: Estimating Uncertainty in Dataset Labels By Curtis Northcutt, Lu Jiang, Isaac Chuang. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and ...

Tag Page - L7 An Introduction to Confident Learning: Finding and Learning with Label Errors in Datasets. This post overviews the paper Confident Learning: Estimating Uncertainty in Dataset Labels authored by Curtis G. Northcutt, Lu Jiang, and Isaac L. Chuang. Tags: machine-learning, confident-learning, noisy-labels, deep-learning.

cleanlab · PyPI Fully characterize label noise and uncertainty in your dataset. s denotes a random variable that represents the observed, ... {Confident Learning: Estimating Uncertainty in Dataset Labels}, author={Curtis G. Northcutt and Lu Jiang and Isaac L. Chuang}, journal={Journal of Artificial Intelligence Research (JAIR)}, volume={70}, pages={1373--1411 ...

Confident Learning - CL - 置信学习 (confident learning) · Issue #795 · junxnone/tech-io · GitHub Reference paper - 2019 - Confident Learning: Estimating Uncertainty in Dataset Labels. Did you know that ImageNet contains 100,000 label errors? ...

[R] Announcing Confident Learning: Finding and Learning with Label ... Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence.

Confident Learning: Estimating Uncertainty in Dataset Labels Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.
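The three principles named above (counting with per-class probabilistic thresholds, ranking, pruning) can be illustrated with a small pure-Python sketch. It is a simplified illustration, not cleanlab's implementation: the function names, the toy labels, and the toy predicted probabilities are all hypothetical, and it assumes each class threshold is the mean predicted probability of that class over the examples labeled with it.

```python
def class_thresholds(labels, pred_probs, num_classes):
    """Per-class threshold: mean predicted probability of class k
    over the examples whose given label is k (assumed definition)."""
    thresholds = []
    for k in range(num_classes):
        probs_k = [p[k] for y, p in zip(labels, pred_probs) if y == k]
        # A class with no labeled examples can never be confidently counted.
        thresholds.append(sum(probs_k) / len(probs_k) if probs_k else float("inf"))
    return thresholds


def confident_counts(labels, pred_probs, num_classes):
    """Count matrix: rows are given labels, columns are confidently guessed classes.
    Also returns suspected label errors, ranked from most to least suspicious."""
    thresholds = class_thresholds(labels, pred_probs, num_classes)
    counts = [[0] * num_classes for _ in range(num_classes)]
    suspects = []
    for idx, (y, p) in enumerate(zip(labels, pred_probs)):
        # Classes whose predicted probability clears that class's threshold.
        candidates = [k for k in range(num_classes) if p[k] >= thresholds[k]]
        if not candidates:
            continue  # not confidently any class, so the example is not counted
        guess = max(candidates, key=lambda k: p[k])
        counts[y][guess] += 1
        if guess != y:
            # Rank by the probability assigned to the given label (lower = more suspicious).
            suspects.append((p[y], idx))
    ranked = [idx for _, idx in sorted(suspects)]
    return counts, ranked


# Toy data (made up): six examples, two classes.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [[0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
              [0.1, 0.9], [0.3, 0.7], [0.85, 0.15]]

counts, ranked = confident_counts(labels, pred_probs, num_classes=2)
# Pruning: drop the suspected label errors before retraining.
kept = [i for i in range(len(labels)) if i not in set(ranked)]
print(counts, ranked, kept)
```

On this toy data, examples 2 and 5 land off the diagonal of the count matrix and are the ones pruned. In practice the predicted probabilities would come from a model evaluated out of sample (for example via cross-validation), which this sketch does not cover.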

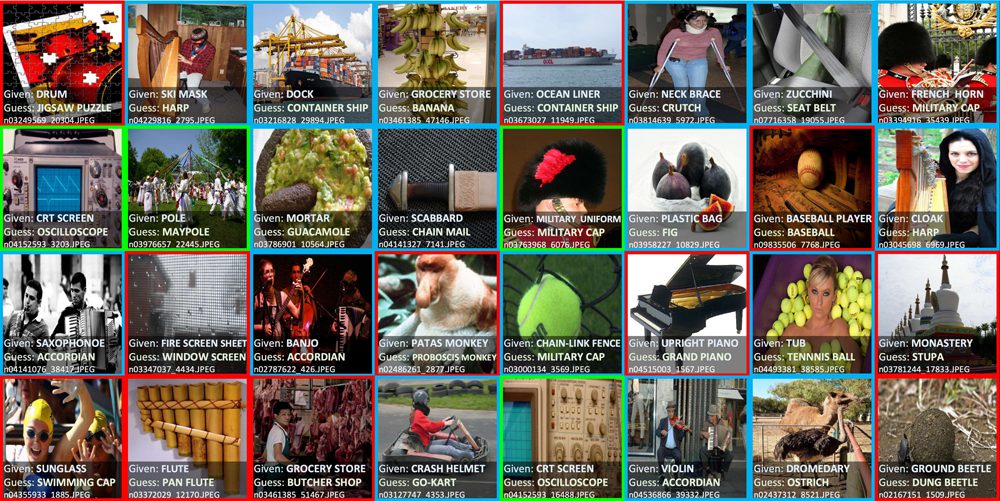

Noisy Labels are Treasure: Mean-Teacher-Assisted Confident Learning for ... Specifically, with the adapted confident learning assisted by a third party, i.e., the weight-averaged teacher model, the noisy labels in the additional low-quality dataset can be transformed from 'encumbrance' to 'treasure' via progressive pixel-wise soft-correction, thus providing productive guidance. Extensive experiments using two ...

Calmcode - bad labels: Prune We can also use cleanlab to help us find bad labels. Cleanlab offers an interesting suite of tools surrounding the concept of "confident learning". The goal is to be able to learn with noisy labels and it also offers features that help with estimating uncertainty in dataset labels. Note this tutorial uses cleanlab v1. The code examples run, but ...



Characterizing Label Errors: Confident Learning for Noisy-Labeled Image ... 2.2 The Confident Learning Module. Based on the assumption of Angluin, CL can identify the label errors in the datasets and improve the training with noisy labels by estimating the joint distribution between the noisy (observed) labels \(\tilde{y}\) and the true (latent) labels \({y^*}\). Remarkably, no hyper-parameters and few extra ...
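Estimating the joint distribution between \(\tilde{y}\) and \(y^*\) can be sketched as a row-calibrated normalization of a count matrix. This is a minimal sketch under stated assumptions: rows index the observed label, columns index the confidently estimated true label, and the function name `estimate_joint` and the toy numbers are hypothetical rather than taken from the snippet.

```python
def estimate_joint(count_matrix, label_counts):
    """Turn confident counts into an estimated joint distribution.

    count_matrix[i][j]: examples with observed label i confidently counted
        as true class j (hypothetical input).
    label_counts[i]: total number of examples whose observed label is i.
    """
    calibrated = []
    for row, n_i in zip(count_matrix, label_counts):
        row_sum = sum(row)
        # Rescale each row so it sums to the number of examples observed with label i.
        calibrated.append([c / row_sum * n_i if row_sum else 0.0 for c in row])
    total = sum(sum(row) for row in calibrated)
    if total == 0:
        raise ValueError("count matrix is empty")
    # Normalize so that all entries sum to 1.
    return [[value / total for value in row] for row in calibrated]


# Toy two-class example (made-up numbers).
toy_counts = [[2, 1], [1, 2]]
joint = estimate_joint(toy_counts, label_counts=[3, 3])

num_classes = len(joint)
# Row sums give the observed-label distribution; column sums give the
# estimated prior over true labels.
observed_marginal = [sum(row) for row in joint]
true_prior = [sum(row[j] for row in joint) for j in range(num_classes)]
# Off-diagonal mass is the estimated fraction of mislabeled examples.
noise_fraction = sum(joint[i][j]
                     for i in range(num_classes)
                     for j in range(num_classes) if i != j)
print(joint, true_prior, noise_fraction)
```

The marginals and the off-diagonal mass are the kind of summary statistics the joint makes available; multiplying `noise_fraction` by the dataset size gives a rough estimate of how many labels are wrong.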

Finding and Learning with Label Errors in Datasets I recommend mapping the labels to 0, 1, 2. Then after training, when you predict, you can call classifier.predict_proba() and it will give you the probabilities for each class. So an example with 50% probability of class label 1 and 50% probability of class label 2 would give you output [0, 0.5, 0.5].
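The label mapping suggested in the comment above might look like the following sketch. The class names and the probability row are made up for illustration, and the row is written by hand rather than produced by a real classifier.

```python
# Hypothetical string labels as they might appear in a raw dataset.
raw_labels = ["cat", "dog", "bird", "dog"]

# Map each distinct class name to an integer 0..K-1 (sorted for a stable order).
classes = sorted(set(raw_labels))            # ['bird', 'cat', 'dog']
label_to_index = {name: i for i, name in enumerate(classes)}
int_labels = [label_to_index[name] for name in raw_labels]

# One row of predicted probabilities, indexed by the integer labels:
# 0% for class 0, 50% for class 1, 50% for class 2.
example_probs = [0.0, 0.5, 0.5]

# Position k in the row refers to classes[k], so the row is only
# interpretable once the label-to-index mapping is fixed.
for k, prob in enumerate(example_probs):
    print(f"class {k} ({classes[k]}): {prob:.2f}")
```

Keeping the mapping in a dictionary makes it easy to translate flagged indices back to the original class names later.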

Uncertainty-Aware Learning against Label Noise on Imbalanced Datasets First, epistemic uncertainty-aware class-specific noise modeling is performed to identify trustworthy clean samples and refine/discard highly confident true/corrupted labels, and aleatoric uncertainty is introduced in the subsequent learning process to prevent noise accumulation in the label noise modeling process. Learning against label noise is a vital topic to guarantee a reliable ...

![[PDF] Paying down metadata debt: learning the representation of concepts using topic models ...](https://d3i71xaburhd42.cloudfront.net/4301d8d1d5a4c35b1ac7412162f4eefa3d0ebbe9/6-Figure1-1.png)
